How to Tune a NAS for Direct-from-Server Editing of 5K Video

At 45 Drives, we make really large capacity storage servers. When we first started out, our machines were relatively slow, and focused on cold-to-lukewarm storage applications; but our users pushed us to achieve more performance and reliability. This drove the evolution of our direct-wired architecture, which delivers enterprise level speed (read/write in excess of 3 Gigabytes/second) and reliability, without losing the simplicity and price point.

All this extra speed made our Storinator servers highly suitable for a number of new applications, one of which is video editing. This field has seen its storage needs explode in recent years, and it benefits hugely from our capacity and density. Organizations have used our servers to centralize their storage, but the speed has opened up a whole new opportunity, namely to edit directly from the central server, rather than download and work off of internal hard drives. This saves the time formerly required for transferring files to and from the workstation, and editing can also take place at a performance level higher than can be achieved from a single local drive (mechanical or SSD).

However, to get performance necessary for direct-from-server editing, high speed connectivity is required. Fortunately, 10GbE networking has recently become affordable, and has the speed necessary for this task, provided you know how to harness it! Out of the box, most NAS software will not achieve these speeds. However, with some tuning you can achieve this holy grail of faster-than-local access and editing, at resolutions up to 5K, directly from centralized storage!

And a final point before I get started: in video editing, ‘fast access’ means something very specific, namely large file sequential transfer speeds from server to a single workstation. This is different from the more common task of maximizing aggregate throughput of smaller files to a large number of workstations. So in this blog, I’m going to focus on how to maximize transfer speed from NAS to a single workstation, with the goal of approaching saturation of a 10GbE line.

About Speed

In much of the video post production world, files tend to be stored locally on editing workstations for performance reasons. Today’s de facto performance standard is the internal Solid State Drive (SSD), which can transfer data at ~400 – 500 MegaBytes per second (MB/s), faster than mechanical drives (~150 MB/s).

At most video editing workstations, editing from an internal SSD feels ‘snappy,’ but our Storinator machines are technically capable of transferring multiple GigaBytes per second. This is several times faster than an internal SSD, so working from a central storage server sounds really exciting! However, you have to consider connectivity, which, unless you have an exotic network, will throttle transfers to your workstation to a fraction of what the server can actually perform. Here are realistic maximum transfer speeds:

| Link Speed | Realistic Max Throughput (MB/s) |
| --- | --- |
| 1 Gigabit Network | 125 MB/s |
| 10 Gigabit Network | 1100 MB/s |

So, if you’ve moved up to a 10 Gigabit Ethernet (GbE) network (which is now both simple and affordable), then it is possible to achieve file transfers from a large central NAS server that are on the order of 2x faster than an internal SSD. This would make it practical and advantageous to edit directly off of a central server, at increased performance levels, with the security of RAID, and without the overhead of transferring files before and after editing. With servers such as ours, this is real and achievable, and delivers a better and more productive experience at the workstation. But to achieve this performance gain in video editing, you must be able to achieve single client transfers at a speed that approaches saturation of the 10GbE connection.
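The figures in the table can be sanity-checked with a little arithmetic. The sketch below is a rough estimate, not a measurement: it assumes standard Ethernet framing (38 bytes of per-frame overhead on the wire) and 40 bytes of IPv4 and TCP headers with no options, and the function name is purely illustrative. It yields a theoretical ceiling for TCP payload; real file-sharing traffic lands somewhat lower, which is consistent with the ~1100 MB/s figure for 10GbE.

```python
def tcp_payload_ceiling_mb_s(link_gbit_s, mtu=1500):
    """Theoretical TCP payload rate in MB/s (decimal) for a given link and MTU."""
    wire_overhead = 38    # preamble+SFD 8, Ethernet header 14, FCS 4, inter-frame gap 12
    ip_tcp_headers = 40   # IPv4 20 + TCP 20, assuming no header options
    line_rate_mb_s = link_gbit_s * 1000 / 8
    efficiency = (mtu - ip_tcp_headers) / (mtu + wire_overhead)
    return line_rate_mb_s * efficiency


for label, gbit, mtu in [
    ("1GbE, MTU 1500", 1, 1500),
    ("10GbE, MTU 1500", 10, 1500),
    ("10GbE, MTU 9000", 10, 9000),
]:
    print(f"{label}: {tcp_payload_ceiling_mb_s(gbit, mtu):.0f} MB/s")
```

Under these assumptions the ceiling is roughly 119 MB/s for 1GbE and roughly 1187 to 1239 MB/s for 10GbE, depending on frame size.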

Beware of Disappointment

Typically, if you set up a 10GbE network and connect a Storinator running a NAS or server OS to a fast workstation, you will see out-of-the-box transfer speeds on the order of 400 – 500 MB/s. In a lot of ways, this is exciting: you have achieved consolidation of storage and what some call ‘eternally online status’ for your data, at speeds faster than an internal mechanical drive, and in fact on the order of an internal SSD.

However, the ideal is to saturate that 10GbE connection (~1.1 GB/s), so the out-of-box performance can be slightly disappointing for us perfectionists (not a bad way to be ‘disappointed’ really, I suppose).

The Holy Grail – Smokin’ fast single client transfers from massive centralized storage

In working with our users, it has become clear that the “Holy Grail” of media storage in video editing is centralized storage all users can access, at speeds that are greater than what internal SSDs are capable of. This allows Video Editors to work directly from the server, while resting assured all their data is safe and secure on a redundant RAID array.

This can be achieved with a Storinator Massive Storage Pod, a 10GbE network, and a fast workstation with plenty of RAM, but to really get the most out of this setup, you need to tune things to move from ‘out of the box’ performance up to single client transfers that saturate 10GbE.

The following examples show how to configure your NAS and client computers to achieve maximum performance in a wide range of setups. With the proper understanding of how to set up your storage network, we believe our hardware can provide you this “Holy Grail” all video producers dream about.

EXAMPLES

I’ve provided several examples below for various hardware and NAS software combinations. However, we can achieve similar single-client transfer performance from any of our hardware, running a variety of OS’s, including server distros of Linux such as CentOS, as well as FreeBSD and the new and exciting RockStor NAS software (which runs over Linux, similar to how FreeNAS runs over FreeBSD).

System Description

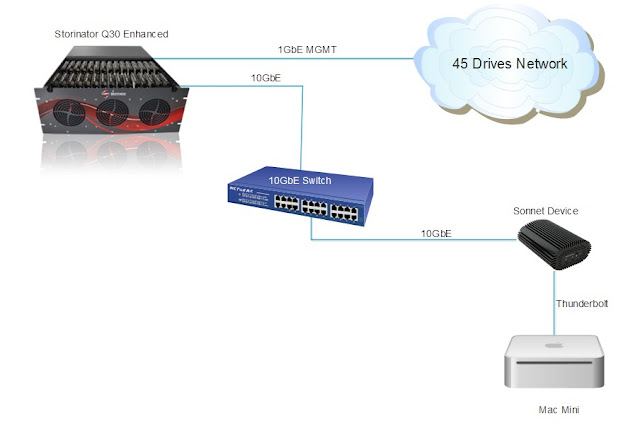

Storinator Q30 Enhanced, running FreeNAS 9.10 NAS Appliance (over FreeBSD):

Q30 Specifications

CPU = E5-2620

RAM = 32GB ECC DDR4 (4x 8GB)

HBA = Highpoint R750

Motherboard = Supermicro X10-SRL

NIC = Intel X540-AT2

HDD = 6TB WD Re

Switch = Netgear Prosafe XS708E

Preface, Tuning:

In the examples below, I am going to show how to get the maximum single client throughput using both Mac OSX and Windows clients.

To do this, we have to tweak a few settings on both the client OS and the host NAS. In an attempt to keep everything neat and tidy I will present the tuning settings that are constant across all examples first.

Jumbo Frames

You will want to turn on jumbo frames on your server and on the workstations attached via 10GbE. Here is a quick “how-to” on the OS’s I will discuss below.

FreeNAS

In the FreeNAS WebGUI, navigate to the “Network -> Interfaces” tab, select your 10GbE interface, choose “Edit”, and in the “Options” box enter “mtu 9000”.

OSX

Open “System Preferences” and click on “Network”. Select your 10GbE/Thunderbolt port connected to the NAS and click on “Advanced”. On the window that pops up, navigate to the “Ethernet Tab”. Here you want to fill out the information like so:

Windows

Navigate to “Control Panel -> Network and Internet -> Network and Sharing Center”. Click on your 10GbE interface, hit “Properties” and then “Configure”. In the window that pops up, navigate to the “Advanced” tab and, under “Jumbo Packet”, change the value from “Disabled” to “9014 Bytes”.
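Once jumbo frames are enabled on the server, the switch, and the workstation, it is worth confirming that a full-size frame actually makes it end to end. One common way is a ping with the “don’t fragment” flag set and a payload of 8972 bytes (9000 minus 20 bytes of IPv4 header and 8 bytes of ICMP header). The sketch below only builds the command line for the current OS; the helper name and the address `192.168.1.50` are placeholders, not values from our setup.

```python
import platform

IP_HEADER = 20    # bytes, IPv4 without options
ICMP_HEADER = 8   # bytes


def jumbo_ping_command(host, mtu=9000, system=None):
    """Return a ping command that sends one unfragmentable MTU-sized packet stream."""
    system = system or platform.system()
    payload = str(mtu - IP_HEADER - ICMP_HEADER)
    if system == "Windows":
        return ["ping", "-f", "-l", payload, host]       # -f: don't fragment
    if system in ("Darwin", "FreeBSD"):
        return ["ping", "-D", "-s", payload, host]       # -D: don't fragment
    return ["ping", "-M", "do", "-s", payload, host]     # Linux (iputils)


# Placeholder address; substitute the 10GbE address of your NAS.
print(" ".join(jumbo_ping_command("192.168.1.50")))
```

If the replies come back, jumbo frames are working along the whole path. If the ping reports that the packet needs to be fragmented, or simply times out, some device in between is still at a smaller MTU.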

NAS NIC Tuning

FreeNAS is built on the FreeBSD kernel and therefore is pretty fast by default; however, the default settings appear to be selected to give ideal performance on Gigabit or slower hardware.

Since we are using 10GbE hardware, some settings need to be tuned.

By setting the following tunables on the FreeNAS host you will see a significant improvement in network throughput. This is done by navigating to “System-> Tunables”. These settings take effect immediately, no reboot required.

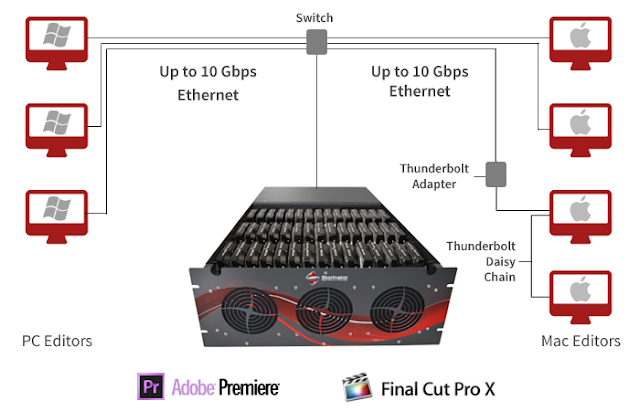

Example 1 – Mac OSX Clients, SMB & AFP

Let’s start in the Mac world. I have created a 30-drive RAIDZ2 pool (3 VDEVs of 10 drives) out of 6TB WD Re drives on my NAS, and have a Mac Mini client wanting to read and write files from the NAS as fast as possible.

Since I cannot add a 10GbE card to the Mac, I am limited to the built-in Thunderbolt interface, and therefore need to use a 10GbE-to-Thunderbolt converter. I chose to use a Sonnet Twin10G for a couple of reasons: it is relatively low cost compared to alternatives, and has a bunch of nice features including support for Link Aggregation and really simplified set-up.
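For a sense of what this layout provides, here is a back-of-the-envelope capacity calculation. It is a simplified sketch: it counts only the two parity drives per RAIDZ2 VDEV and ignores ZFS metadata, padding, and the difference between TB and TiB, so the space actually reported by the NAS will be lower. The function name is illustrative.

```python
def raidz_usable_tb(vdevs, drives_per_vdev, drive_tb, parity=2):
    """Approximate usable capacity: every VDEV gives up `parity` drives."""
    data_drives = drives_per_vdev - parity
    return vdevs * data_drives * drive_tb


raw_tb = 3 * 10 * 6
usable_tb = raidz_usable_tb(vdevs=3, drives_per_vdev=10, drive_tb=6)
print(f"raw: {raw_tb} TB, usable before ZFS overhead: {usable_tb} TB")
```

With 3 VDEVs of 10 drives, that is 144 TB of the 180 TB raw, and each VDEV can lose up to two drives without data loss.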

Below is a diagram of my network. The set-up in this scenario is quite painless, simply plug and play (minus the Sonnet driver on the client end).

I then created a simple AFP share, mounted it on my Mac client, and measured network throughput using Blackmagic Disk Speed Test (it’s free on the app store). The results are below, and as you can see, out of the box transfer speeds are quite fast.
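If you want a scriptable cross-check alongside Blackmagic Disk Speed Test, a large sequential write followed by a read-back gives a comparable number. This is a minimal sketch and not the tool used for the results here; the function name and the example mount path are assumptions. Note that the read-back may be served partly from the client’s cache, so use a test size well beyond the workstation’s RAM for a trustworthy read figure.

```python
import os
import time


def measure_sequential(path, total_bytes=1 << 30, chunk_bytes=4 << 20):
    """Write then read a test file at `path`; return (write_MB_s, read_MB_s)."""
    chunk = os.urandom(chunk_bytes)          # incompressible data
    n_chunks = total_bytes // chunk_bytes

    start = time.perf_counter()
    with open(path, "wb") as f:
        for _ in range(n_chunks):
            f.write(chunk)
        f.flush()
        os.fsync(f.fileno())                 # make sure data has left the client
    write_s = time.perf_counter() - start

    bytes_read = 0
    start = time.perf_counter()
    with open(path, "rb") as f:
        while True:
            data = f.read(chunk_bytes)
            if not data:
                break
            bytes_read += len(data)
    read_s = time.perf_counter() - start

    os.remove(path)                          # clean up the test file
    return (n_chunks * chunk_bytes) / write_s / 1e6, bytes_read / read_s / 1e6


# Example (hypothetical mount point of the share on a Mac):
# print(measure_sequential("/Volumes/NAS/speedtest.bin", total_bytes=16 << 30))
```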

So when using a Mac client via 10 GbE / Sonnet / Thunderbolt, no tuning besides jumbo frames is required, provided you use an AFP share.

For those editing shops that have both OSX and Windows clients needing access to a centralized NAS, you can use an SMB share to include everybody. Although a little slower than the AFP share, speeds are still pretty good.

This is great news for editors using Adobe Premiere because unlike its competitor, Final Cut Pro X (FCPX), Premiere supports network drives of any protocol, including AFP and SMB.

Example 2: Mac OSX Client, NFS (Final Cut ProX Support)

For those using Mac video editing workstations running Apple’s Final Cut Pro X (FCPX), you may already be aware that it only supports local storage devices and SANs. At first glance this seems to severely limit the options for high density, high performance network storage to expensive SAN based solutions. But we’ve found how to tune our NAS solution to get faster-than-internal-SSD speeds.

Using an NFS share allows the system to use the mounted network drive as a local device, which will allow it to work with FCPX. Using the same setup but NFS rather than AFP/SMB, you can see below that out-of-the-box performance, while respectable, is not as fast as we would like it to be.

There is good news, however…we can tune it to achieve significant performance gains.

The default NFS mount settings in OSX do not include the necessary options for maximum throughput nor do they allow for the proper locking of files that FCPX requires. To enable your NFS mounts to use these settings you will need to add the following lines to the file “/etc/nfs.conf”.

nfs.client.mount.options=nfsvers=3,tcp,async,locallocks,rw,rdirplus,rwsize=65536

nfs.client.allow_async=1

I did this by opening up a terminal and using “nano” to edit the nfs.conf file…

sudo nano /etc/nfs.conf
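If you have several Macs to set up, hand-editing each file gets tedious. The sketch below is one possible way to script it, under a few assumptions: the helper name is made up, the version option is spelled `nfsvers=3` as in the macOS `mount_nfs` man page, and a key that is already set in the file is left untouched rather than overwritten. Writing to “/etc/nfs.conf” itself requires root.

```python
from pathlib import Path

NFS_SETTINGS = [
    "nfs.client.mount.options=nfsvers=3,tcp,async,locallocks,rw,rdirplus,rwsize=65536",
    "nfs.client.allow_async=1",
]


def ensure_settings(conf_path, settings=NFS_SETTINGS):
    """Append settings whose key is not yet present; return the lines added."""
    path = Path(conf_path)
    text = path.read_text() if path.exists() else ""
    present = {
        line.split("=", 1)[0].strip()
        for line in text.splitlines()
        if "=" in line and not line.lstrip().startswith("#")
    }
    missing = [s for s in settings if s.split("=", 1)[0] not in present]
    if missing:
        with path.open("a") as f:
            if text and not text.endswith("\n"):
                f.write("\n")                # keep the existing last line intact
            f.write("\n".join(missing) + "\n")
    return missing


# Example (run with sudo): ensure_settings("/etc/nfs.conf")
```

Running it a second time adds nothing, so it is safe to include in a workstation setup script.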

After applying these settings, I simply unmounted the share and remounted through “Go -> Connect To Server” and I doubled my throughput (2.16 times to be exact)!

This info was gathered from this informative white-paper on the topic of interfacing FCPX and NFS shares authored by EMC.

While this is not full line speed, nor as high as the transfer speeds we were getting with an AFP share, it is noticeably faster than an internal SSD, and sufficient to allow FCPX editing shops to work directly from a central NAS.

Example 3: Windows Client, SMB

For those of you editing in Windows, have no fear, we have you covered as well. Here I will show you how I shared my volume via SMB to a Windows 10 client.

This time, however, since my client is now a Windows 10 PC, I don’t need my Sonnet device any more, and instead plugged a 10GbE Intel X540-T2 NIC into my system.

To get optimal speeds two things need to be set on the client side. First, set jumbo frames, then increase the transmit and receive buffers to the allowed maximums.

To do this, you should first download the latest driver package (v20.7) for the Intel 10GbE NIC.

Once installed, navigate to “Control Panel -> Network and Internet -> Network and Sharing Center”. Click on your 10GbE interface, hit “Properties” and then “Configure”. You want to increase the Receive and Transmit Buffers as high as they will go. Receive will be 4096 and Transmit will be 16384.

With the host and the client tuned for optimal transfers, we are ready to test it out.

Since my Windows machine has a lot of RAM, I decided to create a 20GB RAM disk as a super fast drive to test the read and write speeds of my NAS.

Below is a transfer of an 18GB folder from the RAM disk to the NAS.

It showcases the write speed of the NAS. The NAS drive is called “NASa” and the “A:” drive is the 20GB RAM disk.

You can see that write speeds are saturating the 10GbE connection at ~1.1 GBytes/s. This is reiterated in the network throughput trace in the bottom right corner.

Here is a transfer of the same folder from the NAS to the RAM disk.

This showcases the read speed of the NAS.

Although ~100MB/s less than the write speeds, the read speeds are pretty much saturating the 10GbE connection as well.
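To put these rates in perspective, here is how long the same 18GB folder would take to move at the speeds discussed in this post. This is simple arithmetic, not a measurement; the 450 MB/s SSD figure is an assumed midpoint of the 400 – 500 MB/s range quoted earlier, and the function name is illustrative.

```python
def transfer_seconds(size_gb, rate_mb_s):
    """Time to move `size_gb` (decimal GB) at a sustained `rate_mb_s`."""
    return size_gb * 1000 / rate_mb_s


rates_mb_s = {
    "1GbE": 125,
    "internal SSD (assumed midpoint)": 450,
    "tuned 10GbE": 1100,
}
for label, rate in rates_mb_s.items():
    print(f"{label}: {transfer_seconds(18, rate):.0f} s")
```

That works out to about 144 seconds over 1GbE, 40 seconds from an internal SSD, and roughly 16 seconds over a saturated 10GbE link.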

Conclusion

Having massive storage and high throughput in a centralized NAS can offer huge benefits to video editing studios. Editing can take place directly from files on the server, without downloading to local workstations. From our examples you can see that, regardless of whether you are editing on Windows or Mac, you can achieve single client transfer throughput from the centralized server that approaches the “holy grail” of speeds greater than an internal SSD.

Mac shops running Adobe Premiere or Final Cut Pro X can take advantage of a NAS via Thunderbolt, without having to install 10GbE hardware.

Mac users running Premiere are plug and play with AFP & SMB shares, which are lightning fast, nearly saturating the 10GbE to Sonnet line.

Final Cut Pro X users are limited by Apple’s FCPX architecture, which is designed to work solely with their software (XSAN), and therefore hardware. NFS shares offer a solid workaround; however, you need to tune them to get the maximum single client throughput, which meets our ‘holy grail’ criterion.

Windows shops are limited to SMB shares, but with a little tuning you can have your NAS primed to handle even the toughest workload at full line speeds.